In today’s data-driven world, organizations are generating and collecting vast amounts of information from diverse sources. This data, often referred to as “big data,” presents both significant opportunities and challenges.

To effectively manage, process, and analyze big data, organizations rely on specialized software and hardware systems known as big data platforms. This comprehensive guide will delve into the intricacies of big data platforms, exploring their features, benefits, common architectures, and the key technologies that underpin them.

What is Big Data?

Before diving into the specifics of big data platforms, it’s crucial to understand what constitutes big data. While there’s no universally agreed-upon definition, big data is typically characterized by the “Five Vs”:

- Volume: The sheer quantity of data is massive, often measured in terabytes, petabytes, or even exabytes.

- Velocity: Data streams in at high speed, requiring real-time or near real-time processing capabilities.

- Variety: Data comes in diverse formats, including structured (e.g., relational databases), semi-structured (e.g., JSON, XML), and unstructured (e.g., text, images, videos).

- Veracity: The quality and accuracy of data can vary significantly, requiring data cleansing and validation processes.

- Value: The ultimate goal is to extract valuable insights and knowledge from the data, enabling better decision-making and improved business outcomes.

Together, these characteristics set big data apart from the workloads that traditional systems, such as single-server relational databases, were designed for. Those systems struggle with the scale, speed, and complexity of big data, which is why specialized platforms emerged.

The Need for Big Data Platforms

The proliferation of big data has created a critical need for platforms that can effectively address its challenges. Big data platforms offer several key benefits:

- Scalability: They can handle massive volumes of data and scale horizontally to accommodate growing data needs.

- Performance: They distribute processing across many machines and run it in parallel, shortening the time from raw data to insight and decision.

- Flexibility: They support a wide range of data formats and processing paradigms, allowing organizations to analyze diverse datasets.

- Cost-effectiveness: They often build on open-source software, commodity hardware, and pay-as-you-go cloud infrastructure, which can reduce the total cost of ownership.

- Advanced Analytics: They integrate with advanced analytics tools and techniques, such as machine learning and data mining, enabling deeper insights and predictive modeling.

Without a robust big data platform, organizations risk being overwhelmed by the sheer volume and complexity of their data, missing out on valuable insights and competitive advantages.

Key Components of a Big Data Platform

A typical big data platform comprises several key components that work together to manage, process, and analyze data. These components include:

- Data Ingestion: Mechanisms for collecting data from various sources, such as databases, applications, sensors, and social media feeds. This often involves tools like Apache Kafka, Apache Flume, and Apache Sqoop.

- Data Storage: A scalable and fault-tolerant storage system for storing massive amounts of data. Common options include Hadoop Distributed File System (HDFS), cloud-based object storage (e.g., Amazon S3, Azure Blob Storage), and NoSQL databases.

- Data Processing: Frameworks for processing data in batch or real-time. Popular choices include Apache Hadoop (MapReduce), Apache Spark, Apache Flink, and Apache Storm.

- Data Governance and Security: Mechanisms for ensuring data quality, security, and compliance with regulatory requirements. This includes data lineage tracking, access control, and encryption.

- Data Analytics and Visualization: Tools for analyzing data and presenting insights in a user-friendly format. This can include SQL-based query engines (e.g., Apache Hive, Apache Impala), data visualization tools (e.g., Tableau, Power BI), and machine learning libraries (e.g., scikit-learn, TensorFlow).

The specific components and technologies used in a big data platform will vary depending on the organization’s needs and use cases.

Popular Big Data Technologies

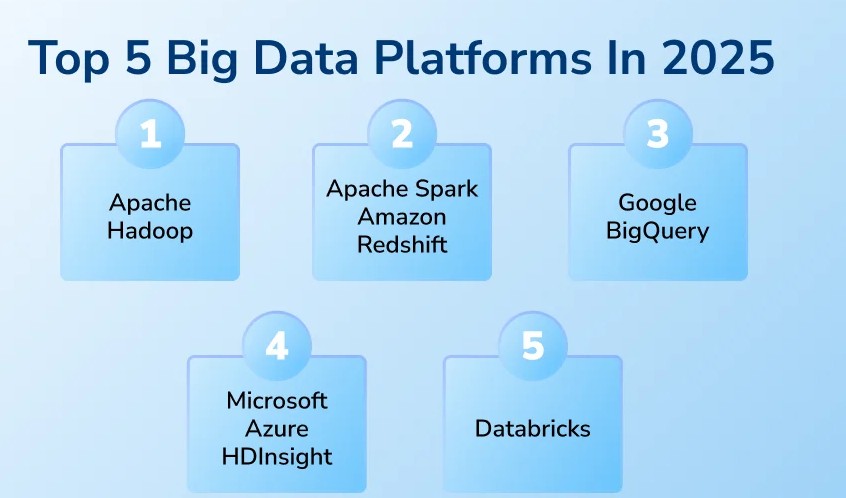

Several key technologies underpin modern big data platforms. Understanding these technologies is essential for designing and implementing effective big data solutions.

Apache Hadoop

Apache Hadoop is an open-source framework for distributed storage and processing of large datasets. It consists of two core components:

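- Hadoop Distributed File System (HDFS): A distributed file system that splits files into large blocks and stores them across a cluster of commodity hardware. Each block is replicated on multiple nodes (three copies by default), which gives HDFS high fault tolerance, and capacity scales by adding nodes.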

- MapReduce: A programming model for processing large datasets in parallel. MapReduce breaks down processing tasks into smaller units that can be executed concurrently across the cluster.
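The classic illustration of the MapReduce model is a word count. The sketch below runs the map, shuffle, and reduce phases in plain, single-process Python, so it shows the shape of the computation rather than Hadoop's Java API. The function names are illustrative; in a real cluster the framework runs many map and reduce tasks in parallel and performs the shuffle over the network.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Iterable, Iterator


def map_phase(line: str) -> Iterator[tuple[str, int]]:
    # Map: emit a (word, 1) pair for every word in one input record.
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    # Shuffle: group intermediate values by key (Hadoop does this between phases).
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    # Reduce: combine all values for one key into a single result.
    return key, sum(values)


def word_count(lines: list[str]) -> dict[str, int]:
    # Each line stands in for one input split; a cluster would map splits in parallel.
    intermediate = (pair for line in lines for pair in map_phase(line))
    grouped = shuffle(intermediate)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


print(word_count(["big data big platforms", "data platforms"]))
# {'big': 2, 'data': 2, 'platforms': 2}
```

Because each map call looks at only one record and each reduce call at only one key, both phases can be spread across as many machines as the data requires.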

While Hadoop was initially the dominant big data processing framework, it has been increasingly complemented by other technologies like Spark, which offer improved performance for many workloads.

Apache Spark

Apache Spark is a fast and general-purpose cluster computing system for big data processing. It offers significant performance advantages over Hadoop MapReduce for many workloads, particularly iterative and interactive processing tasks. Spark’s key features include:

- In-Memory Processing: Spark can cache data in memory, reducing the need to read data from disk repeatedly.

- Support for Multiple Programming Languages: Spark supports Java, Scala, Python, and R, allowing developers to use their preferred language.

- Rich Set of Libraries: Spark provides libraries for SQL, machine learning, graph processing, and streaming.

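- Near Real-Time Processing: Structured Streaming, the successor to the original Spark Streaming API, processes data from sources such as Kafka in small micro-batches by default, giving near real-time latency.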

Spark has become a widely adopted big data processing engine, powering a wide range of applications, from data analytics to machine learning.

Apache Kafka

Apache Kafka is a distributed streaming platform for building real-time data pipelines and streaming applications. It provides high throughput, fault tolerance, and horizontal scalability. Kafka’s key features include:

- Publish-Subscribe Messaging: Kafka acts as a central hub for publishing and subscribing to data streams.

- Durable Storage: Kafka persists records to disk and retains them for a configurable retention period (or indefinitely), so consumers can replay data from any retained offset.

- Real-Time Processing: Kafka feeds stream processing frameworks such as Spark Structured Streaming and Flink, and it ships its own stream processing library, Kafka Streams.

- Scalability and Fault Tolerance: Kafka is designed to scale horizontally and tolerate failures.
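The publish-subscribe, durable-storage, and replay properties can be modeled as an append-only log per partition plus a per-consumer offset. The sketch below is a toy in plain Python, not the Kafka client API: `TopicLog` and `Consumer` are hypothetical names, partitions are chosen with `zlib.crc32` rather than Kafka's own partitioner, and retention, replication, and consumer groups are left out.

```python
from __future__ import annotations

import zlib

Record = tuple[str, str]  # (key, value)


class TopicLog:
    """Toy Kafka-style topic: each partition is an append-only list of records."""

    def __init__(self, num_partitions: int = 3) -> None:
        self.partitions: list[list[Record]] = [[] for _ in range(num_partitions)]

    def publish(self, key: str, value: str) -> tuple[int, int]:
        # Records with the same key land in the same partition, so their order is preserved.
        partition = zlib.crc32(key.encode()) % len(self.partitions)
        self.partitions[partition].append((key, value))
        return partition, len(self.partitions[partition]) - 1  # (partition, offset)

    def read(self, partition: int, offset: int) -> list[Record]:
        # Reads never delete data, so any consumer can re-read from any offset.
        return self.partitions[partition][offset:]


class Consumer:
    """Tracks its own offset in every partition, independently of other consumers."""

    def __init__(self, log: TopicLog) -> None:
        self.log = log
        self.offsets = [0] * len(log.partitions)

    def poll(self) -> list[Record]:
        records: list[Record] = []
        for partition in range(len(self.offsets)):
            batch = self.log.read(partition, self.offsets[partition])
            self.offsets[partition] += len(batch)
            records.extend(batch)
        return records

    def seek_to_beginning(self) -> None:
        # Replay: rewind to the first offset of every partition.
        self.offsets = [0] * len(self.log.partitions)


log = TopicLog()
analytics, billing = Consumer(log), Consumer(log)
log.publish("user-1", "login")
log.publish("user-1", "click")
log.publish("user-2", "login")
print(len(analytics.poll()), len(billing.poll()))  # 3 3: every subscriber sees every record
```

Because consumers only move their own offsets, adding a new subscriber or replaying history never disturbs existing ones.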

Kafka is commonly used for building real-time data pipelines, event-driven architectures, and streaming analytics applications.

NoSQL Databases

NoSQL (Not Only SQL) databases are non-relational databases that are designed to handle large volumes of unstructured and semi-structured data. They offer greater flexibility and scalability compared to traditional relational databases. Common types of NoSQL databases include:

- Key-Value Stores: (e.g., Redis, Memcached) Store data as key-value pairs, providing fast lookups.

- Document Databases: (e.g., MongoDB, Couchbase) Store data as JSON-like documents, allowing for flexible data schemas.

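- Column-Family Stores: (e.g., Cassandra, HBase) Store rows identified by a row key, with columns grouped into column families; each row can hold a different, potentially very large set of columns. Their log-structured storage engines make them well suited to high write throughput and fast lookups by row key.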

- Graph Databases: (e.g., Neo4j) Store data as nodes and relationships, ideal for analyzing complex relationships between entities.
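To make the four models concrete, the sketch below expresses one small, hypothetical customer dataset in each shape using plain Python dictionaries and lists. It is purely illustrative and does not use any database's client API; the `recommend` function shows the kind of relationship traversal that graph databases are built to make fast.

```python
from __future__ import annotations

# Key-value: one opaque value per key; the store does not look inside it.
kv_store = {"session:alice": "cart=3;last_page=/checkout"}

# Document: nested, self-describing records whose fields may differ per document.
documents = {
    "alice": {"name": "Alice", "tags": ["vip"], "address": {"city": "Oslo"}},
    "bob": {"name": "Bob"},
}

# Column-family: row key -> column family -> column -> value; rows can have different columns.
column_family = {
    "alice": {"profile": {"name": "Alice"}, "orders": {"2024-01-03": "o-17", "2024-02-11": "o-29"}},
    "bob": {"profile": {"name": "Bob"}, "orders": {}},
}

# Graph: explicit, typed relationships between entities.
edges = [("alice", "FOLLOWS", "bob"), ("bob", "BOUGHT", "headphones"), ("alice", "BOUGHT", "laptop")]


def recommend(user: str) -> set[str]:
    # Products bought by people the user follows, minus what the user already bought.
    followed = {dst for src, rel, dst in edges if src == user and rel == "FOLLOWS"}
    bought_by_followed = {dst for src, rel, dst in edges if src in followed and rel == "BOUGHT"}
    owned = {dst for src, rel, dst in edges if src == user and rel == "BOUGHT"}
    return bought_by_followed - owned


print(recommend("alice"))  # {'headphones'}
```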

NoSQL databases are often used in big data applications where scalability, flexibility, and performance are critical.

Cloud Computing

Cloud computing has revolutionized big data platforms by providing on-demand access to scalable infrastructure and managed services. Cloud-based big data platforms offer several advantages:

- Scalability: Cloud providers offer virtually unlimited scalability, allowing organizations to easily scale their big data infrastructure as needed.

- Cost-effectiveness: Cloud-based services can be more cost-effective than on-premises infrastructure, as organizations only pay for the resources they use.

- Managed Services: Cloud providers offer managed big data services, such as Hadoop clusters, Spark clusters, and NoSQL databases, reducing the operational burden on organizations.

- Global Reach: Cloud providers have data centers around the world, allowing organizations to deploy big data platforms closer to their data sources and users.

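The major cloud providers, Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP), all offer managed big data services, such as Amazon EMR, Azure HDInsight, and Google Cloud Dataproc for running Hadoop and Spark clusters.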

Big Data Platform Architectures

There are several common architectures for building big data platforms, each with its own strengths and weaknesses. Some of the most popular architectures include:

Hadoop-Based Architecture

This architecture is based on Apache Hadoop and its ecosystem of tools. It typically involves using HDFS for data storage and MapReduce for data processing. Hadoop-based architectures are well-suited for batch processing of large datasets.

Components: HDFS, MapReduce, Hive, Pig, Sqoop, Flume, Oozie

Strengths: Scalability, fault tolerance, cost-effectiveness

Weaknesses: Performance limitations for iterative and interactive workloads, complexity of MapReduce programming

Spark-Based Architecture

This architecture leverages Apache Spark for data processing. Spark-based architectures offer improved performance compared to Hadoop-based architectures, particularly for iterative and interactive workloads. They often integrate with other technologies like Hadoop, Kafka, and NoSQL databases.

Components: Spark Core, Spark SQL, Spark Streaming, MLlib, GraphX, Hadoop (HDFS), Kafka, NoSQL Databases

Strengths: High performance, support for multiple programming languages, rich set of libraries

Weaknesses: Requires more memory than disk-based MapReduce, can be more complex to configure and tune

Lambda Architecture

The Lambda architecture is a hybrid approach that combines batch processing and stream processing. It maintains two parallel processing pipelines: a batch layer that periodically recomputes results from the complete historical dataset, and a speed layer that processes recent data in real time to cover the gap since the last batch run. A serving layer merges the results from both to provide a complete, up-to-date view of the data.

Components: Batch Layer (Hadoop, Spark), Speed Layer (Spark Streaming, Flink, Storm), Serving Layer (NoSQL Databases)

Strengths: Provides both real-time and batch processing capabilities, handles data updates and corrections effectively

Weaknesses: Can be complex to implement and maintain, requires managing two separate processing pipelines
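A minimal sketch of how the serving layer answers a query by merging the two layers, using page-view counts as a hypothetical example. The function and class names are illustrative; in practice the batch view would be computed by Hadoop or Spark, the speed view by a stream processor, and both stored in a serving database.

```python
from __future__ import annotations

from collections import Counter


def batch_view(master_dataset: list[dict]) -> Counter:
    # Batch layer: recompute counts from scratch over the full, immutable dataset.
    return Counter(event["page"] for event in master_dataset)


class SpeedLayer:
    # Speed layer: incrementally count events that arrived after the last batch run.
    def __init__(self) -> None:
        self.counts: Counter = Counter()

    def process(self, event: dict) -> None:
        self.counts[event["page"]] += 1

    def reset(self) -> None:
        # Called once a new batch view has absorbed these events.
        self.counts.clear()


def serve(page: str, batch: Counter, speed: SpeedLayer) -> int:
    # Serving layer: precomputed batch result plus the real-time delta.
    return batch[page] + speed.counts[page]


master = [{"page": "/home"}, {"page": "/home"}, {"page": "/cart"}]
view = batch_view(master)
speed = SpeedLayer()
speed.process({"page": "/home"})  # arrives after the batch run
print(serve("/home", view, speed))  # 3
```

The duplicated logic (counting in both `batch_view` and `SpeedLayer.process`) is exactly the maintenance cost listed as this architecture's weakness.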

Kappa Architecture

The Kappa architecture simplifies the Lambda architecture by eliminating the batch layer. All data is processed through a single stream processing pipeline. When the processing logic changes or corrections are needed, the retained event log is replayed from the beginning through the updated pipeline to rebuild the results.

Components: Stream Processing Engine (Spark Streaming, Flink, Kafka Streams), Message Queue (Kafka)

Strengths: Simpler than Lambda architecture, easier to maintain

Weaknesses: Requires the ability to replay the entire data stream, may not be suitable for all use cases
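In the Kappa style, fixing a bug means deploying a new version of the stream job and replaying the retained log through it. The sketch below uses a hypothetical event format and a plain Python list standing in for a Kafka topic; version 2 of an account-balance job corrects version 1 simply by reprocessing the same events.

```python
from __future__ import annotations

from typing import Callable


def run_pipeline(log: list[dict], to_delta: Callable[[dict], float]) -> dict[str, float]:
    # The single processing path: fold events, in log order, into a materialized view.
    view: dict[str, float] = {}
    for event in log:
        account = event["account"]
        view[account] = view.get(account, 0.0) + to_delta(event)
    return view


def delta_v1(event: dict) -> float:
    # Version 1 (buggy): treats refunds like purchases.
    return event["amount"]


def delta_v2(event: dict) -> float:
    # Version 2 (fixed): refunds reduce the balance.
    return -event["amount"] if event["type"] == "refund" else event["amount"]


event_log = [
    {"account": "a", "type": "purchase", "amount": 10.0},
    {"account": "a", "type": "refund", "amount": 4.0},
    {"account": "b", "type": "purchase", "amount": 5.0},
]

print(run_pipeline(event_log, delta_v1))  # {'a': 14.0, 'b': 5.0}
print(run_pipeline(event_log, delta_v2))  # {'a': 6.0, 'b': 5.0}, rebuilt by replay
```

This only works if the log still holds every event the view depends on, which is the replay requirement noted above.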

Data Lake Architecture

A data lake is a centralized repository for storing data in its raw, unprocessed format. It allows organizations to store vast amounts of data from diverse sources, without the need to predefine a schema. Data can then be processed and analyzed on demand, using various tools and techniques.

Components: Object Storage (Amazon S3, Azure Blob Storage, Google Cloud Storage), Data Ingestion Tools (Kafka, Flume, Sqoop), Data Processing Engines (Spark, Hadoop), Data Governance Tools

Strengths: Flexibility, scalability, supports diverse data formats

Weaknesses: Requires strong data governance to ensure data quality and security, can be challenging to manage
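Storing data without a predefined schema is often called schema-on-read: records land as-is, and each consumer applies its own schema when it reads. The sketch below assumes hypothetical JSON sensor records in a raw zone; one reader parses them, normalizes mixed temperature units, and skips records that do not fit, while the raw data stays untouched for other readers.

```python
from __future__ import annotations

import json

# Raw zone: records are stored exactly as received; nothing is enforced on write.
raw_lines = [
    '{"device": "t-1", "temp_c": 21.5, "ts": "2024-05-01T10:00:00"}',
    '{"device": "t-2", "temp_f": 70.7}',
    "not valid json",
]


def read_with_schema(lines: list[str]) -> list[dict]:
    # Schema applied at read time: parse, normalize units, drop records that do not fit.
    rows = []
    for line in lines:
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            continue
        if "temp_c" in record:
            temp_c = float(record["temp_c"])
        elif "temp_f" in record:
            temp_c = round((float(record["temp_f"]) - 32) * 5 / 9, 2)
        else:
            continue
        rows.append({"device": record.get("device"), "temp_c": temp_c, "ts": record.get("ts")})
    return rows


for row in read_with_schema(raw_lines):
    print(row)
```

The flexibility cuts both ways: nothing stops malformed records from entering the lake, which is why the governance weakness above matters.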

Use Cases for Big Data Platforms

Big data platforms are used in a wide range of industries and applications. Some common use cases include:

Customer Analytics

Big data platforms can be used to analyze customer data from various sources, such as website traffic, social media activity, and purchase history, to gain insights into customer behavior, preferences, and needs. This information can be used to improve customer service, personalize marketing campaigns, and develop new products and services.

Fraud Detection

Big data platforms can be used to detect fraudulent transactions and activities by analyzing large volumes of data in real-time. This can help organizations prevent financial losses and protect their customers.
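As a toy illustration of scoring transactions as they arrive, the sketch below keeps running per-account statistics with Welford's online algorithm and flags amounts far from an account's own history. This is a hypothetical rule, not a production fraud model: the z-score threshold of 3 and the warm-up of 5 transactions are arbitrary assumptions.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class RunningStats:
    # Welford's online algorithm: update mean and variance one value at a time in O(1) memory.
    n: int = 0
    mean: float = 0.0
    m2: float = 0.0

    def update(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    @property
    def std(self) -> float:
        return math.sqrt(self.m2 / (self.n - 1)) if self.n > 1 else 0.0


def flag_suspicious(stream, threshold: float = 3.0, warmup: int = 5) -> list[tuple[str, float]]:
    # Hypothetical rule: flag an amount more than `threshold` standard deviations
    # from the account's history, once the account has `warmup` transactions.
    stats: dict[str, RunningStats] = {}
    flagged = []
    for account, amount in stream:
        s = stats.setdefault(account, RunningStats())
        if s.n >= warmup and s.std > 0 and abs(amount - s.mean) / s.std > threshold:
            flagged.append((account, amount))
        s.update(amount)  # note: flagged amounts still enter the history here
    return flagged
```

Each event is handled once with constant work per account, so the same logic can run inside a stream processor over an unbounded feed.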

Predictive Maintenance

Big data platforms can be used to predict equipment failures and schedule maintenance proactively. This can help organizations reduce downtime, improve efficiency, and extend the lifespan of their assets.

Supply Chain Optimization

Big data platforms can be used to optimize supply chain operations by analyzing data from various sources, such as inventory levels, transportation costs, and demand forecasts. This can help organizations reduce costs, improve efficiency, and respond quickly to changing market conditions.

Personalized Healthcare

Big data platforms can be used to personalize healthcare treatments by analyzing patient data, such as medical history, genetic information, and lifestyle factors. This can help doctors make more informed decisions and improve patient outcomes.

Financial Modeling and Risk Management

Financial institutions utilize big data platforms for complex financial modeling, risk assessment, and regulatory compliance. They can analyze massive datasets to identify market trends, assess credit risk, and detect potential fraud.

Retail and E-commerce Optimization

Retailers and e-commerce businesses use big data platforms to optimize pricing strategies, personalize product recommendations, manage inventory effectively, and improve the overall customer experience. Analyzing sales data, website behavior, and customer demographics allows them to tailor offerings and promotions.

Manufacturing Process Improvement

Manufacturers leverage big data platforms to monitor production processes, identify bottlenecks, predict equipment failures, and optimize resource allocation. This leads to increased efficiency, reduced waste, and improved product quality.

Building a Big Data Platform: Key Considerations

Building a big data platform is a complex undertaking that requires careful planning and execution. Some key considerations include:

Defining Clear Business Objectives

Before embarking on a big data project, it’s crucial to define clear business objectives and identify the specific problems you’re trying to solve. This will help you determine the appropriate technologies, architecture, and resources needed.

Choosing the Right Technologies

Selecting the right technologies for your big data platform is critical to its success. Consider your specific requirements, such as data volume, velocity, variety, and the types of analytics you need to perform. Evaluate different technologies and choose the ones that best meet your needs.

Designing a Scalable and Fault-Tolerant Architecture

Your big data platform should be designed to scale horizontally to accommodate growing data needs. It should also be fault-tolerant, so that it can continue to operate even if some components fail.
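One common technique for spreading data across a growing cluster while keeping redundant copies is consistent hashing with replication; the guide does not prescribe a specific scheme, so treat this as one illustrative option. In the sketch below, `HashRing` is a hypothetical name, SHA-256 is used only to spread keys evenly, and each key is stored on its first `replicas` distinct nodes clockwise around the ring.

```python
from __future__ import annotations

import bisect
import hashlib


def _position(label: str) -> int:
    # Map a string to a point on the ring (hash used only for even spreading).
    return int.from_bytes(hashlib.sha256(label.encode()).digest()[:8], "big")


class HashRing:
    """Consistent-hash ring: each key is stored on `replicas` distinct nodes."""

    def __init__(self, nodes: list[str], replicas: int = 2, vnodes: int = 100) -> None:
        self.replicas = replicas
        self.vnodes = vnodes
        self.ring: list[tuple[int, str]] = []  # sorted (position, node) pairs
        for node in nodes:
            self.add_node(node)

    def add_node(self, node: str) -> None:
        # Virtual nodes spread each machine's share of keys around the ring.
        for i in range(self.vnodes):
            bisect.insort(self.ring, (_position(f"{node}#{i}"), node))

    def owners(self, key: str) -> list[str]:
        # Walk clockwise from the key's position, collecting distinct nodes.
        start = bisect.bisect(self.ring, (_position(key), ""))
        owners: list[str] = []
        for step in range(len(self.ring)):
            node = self.ring[(start + step) % len(self.ring)][1]
            if node not in owners:
                owners.append(node)
                if len(owners) == self.replicas:
                    break
        return owners


ring = HashRing(["node-a", "node-b", "node-c"])
print(ring.owners("customer:42"))  # a primary node and one replica
```

Adding a node only moves the keys that now fall on the new node's positions, roughly 1/N of the data, instead of reshuffling everything; and if one owner fails, the replica still holds the key.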

Implementing Strong Data Governance

Data governance is essential for ensuring data quality, security, and compliance. Implement policies and procedures for data ingestion, storage, processing, and access control. Use data lineage tracking to understand the origin and flow of data.
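Data lineage can be as simple as every transformation recording what it produced and from which input. The sketch below is hypothetical (the `Dataset` class, `tracked` decorator, and step names are illustrative, not a real governance tool's API); production platforms usually capture lineage with dedicated metadata tooling rather than hand-written decorators.

```python
from __future__ import annotations

import functools
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable


@dataclass
class Dataset:
    name: str
    rows: list[dict]
    lineage: list[str] = field(default_factory=list)


def tracked(step: str) -> Callable:
    # Decorator: wrap a row-level transformation so its output records where it came from.
    def decorator(fn: Callable[..., list[dict]]) -> Callable[..., Dataset]:
        @functools.wraps(fn)
        def wrapper(source: Dataset, *args, **kwargs) -> Dataset:
            rows = fn(source.rows, *args, **kwargs)
            stamp = datetime.now(timezone.utc).isoformat(timespec="seconds")
            entry = f"{stamp} {step} <- {source.name}"
            return Dataset(f"{source.name}.{step}", rows, source.lineage + [entry])
        return wrapper
    return decorator


@tracked("drop_null_ids")
def drop_null_ids(rows: list[dict]) -> list[dict]:
    return [r for r in rows if r.get("id") is not None]


@tracked("mask_email")
def mask_email(rows: list[dict]) -> list[dict]:
    return [{**r, "email": "***"} if "email" in r else r for r in rows]


raw = Dataset(
    "crm_export",
    [{"id": 1, "email": "a@example.com"}, {"id": None, "email": "b@example.com"}],
    ["ingested from CRM nightly export"],
)
clean = mask_email(drop_null_ids(raw))
for line in clean.lineage:
    print(line)
```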

Securing Your Data

Big data platforms often contain sensitive information, so it’s crucial to implement strong security measures to protect your data from unauthorized access. This includes encrypting data at rest and in transit, implementing access control policies, and monitoring for security threats.
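Access control policies are often expressed per role and per column, denying anything not explicitly granted and logging every attempt for monitoring. The sketch below assumes a hypothetical in-memory policy table and audit log; encryption at rest and in transit is typically handled by the storage layer and TLS, so it is not shown here.

```python
from __future__ import annotations

from datetime import datetime, timezone

# Hypothetical policy: role -> table -> columns the role may read. Anything unlisted is denied.
POLICIES: dict[str, dict[str, set[str]]] = {
    "analyst": {"sales": {"region", "amount"}},
    "data_engineer": {"sales": {"region", "amount", "customer_email"}},
}

# (timestamp, role, table, columns, granted) for every access attempt.
AUDIT_LOG: list[tuple[str, str, str, tuple[str, ...], bool]] = []


def authorized_select(role: str, table: str, columns: list[str], rows: list[dict]) -> list[dict]:
    # Deny by default: unknown roles or tables get an empty permission set.
    allowed = POLICIES.get(role, {}).get(table, set())
    granted = set(columns) <= allowed
    # Record every attempt, allowed or not, so access can be monitored and audited.
    AUDIT_LOG.append((datetime.now(timezone.utc).isoformat(), role, table, tuple(columns), granted))
    if not granted:
        denied = sorted(set(columns) - allowed)
        raise PermissionError(f"role {role!r} may not read {denied} from {table!r}")
    return [{c: row[c] for c in columns} for row in rows]


sales = [{"region": "EU", "amount": 120.0, "customer_email": "a@example.com"}]
print(authorized_select("analyst", "sales", ["region", "amount"], sales))
try:
    authorized_select("analyst", "sales", ["customer_email"], sales)
except PermissionError as err:
    print(err)
```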

Investing in Skilled Resources

Building and managing a big data platform requires specialized skills and expertise. Invest in training and hiring skilled data engineers, data scientists, and data analysts.

Starting Small and Iterating

It’s often best to start with a small pilot project and iterate based on your experiences. This allows you to learn and adapt as you go, and avoid costly mistakes.

The Future of Big Data Platforms

Big data platforms are constantly evolving, driven by advancements in technology and changing business needs. Some key trends shaping the future of big data platforms include:

Cloud-Native Architectures

Increasingly, big data platforms are being built using cloud-native architectures, which leverage the scalability, flexibility, and cost-effectiveness of cloud computing. This includes packaging workloads in containers (e.g., Docker), orchestrating them with Kubernetes, and using serverless computing platforms.

AI and Machine Learning Integration

AI and machine learning are becoming increasingly integrated into big data platforms. This allows organizations to automate data processing tasks, improve data quality, and generate more sophisticated insights.

Real-Time Data Processing

The demand for real-time data processing is growing rapidly. Big data platforms are evolving to support real-time data ingestion, processing, and analytics, enabling organizations to make faster and more informed decisions.

Edge Computing

Edge computing involves processing data closer to the source, reducing latency and bandwidth requirements. Big data platforms are being extended to support edge computing scenarios, enabling organizations to analyze data from sensors, devices, and other edge sources in real-time.
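The bandwidth saving usually comes from aggregating locally and shipping only a summary, while still reacting to anomalies on the device. The sketch below assumes hypothetical 10 Hz temperature readings and an arbitrary alert limit of 90.0; `summarize_window` is an illustrative name.

```python
from __future__ import annotations

from statistics import mean


def summarize_window(readings: list[float], limit: float) -> dict:
    # Runs on the edge device: collapse a window of raw sensor readings into one small record.
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(mean(readings), 2),
        # Out-of-range readings are kept individually so they can trigger an immediate local alert.
        "alerts": [r for r in readings if r > limit],
    }


# One minute of hypothetical 10 Hz readings: 600 values in, one summary out.
window = [70.0 + (i % 5) * 0.1 for i in range(600)]
window[123] = 95.2
summary = summarize_window(window, limit=90.0)
print(summary["count"], summary["max"], summary["alerts"])  # 600 95.2 [95.2]
```

Only the summary dictionary travels to the central platform, instead of all 600 readings.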

Data Mesh

Data mesh is a decentralized approach to data management that empowers domain teams to own and manage their own data products. This can improve data quality, agility, and innovation.

Increased Automation

Automation is becoming increasingly important for managing big data platforms. Tools are emerging to automate tasks such as data ingestion, data processing, and data governance.

Focus on Data Quality and Trust

As big data becomes more critical to business decision-making, the focus on data quality and trust is increasing. Organizations are investing in tools and processes to ensure that their data is accurate, complete, and reliable.
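Accuracy, completeness, and reliability become measurable once they are turned into checks. The sketch below computes three common ones (missing required fields, duplicate keys, and values failing a validity rule) over hypothetical customer rows; the `quality_report` name and the example rules are assumptions for illustration.

```python
from __future__ import annotations

from typing import Any, Callable


def quality_report(
    rows: list[dict[str, Any]],
    required: list[str],
    key: str,
    validators: dict[str, Callable[[Any], bool]],
) -> dict[str, Any]:
    def present(value: Any) -> bool:
        return value not in (None, "")

    return {
        "rows": len(rows),
        # Completeness: required fields that are missing or empty.
        "missing": {c: sum(not present(r.get(c)) for r in rows) for c in required},
        # Uniqueness: rows whose key repeats an earlier row's key.
        "duplicate_keys": len(rows) - len({r.get(key) for r in rows}),
        # Validity: present values that fail their rule.
        "invalid": {c: sum(present(r.get(c)) and not ok(r[c]) for r in rows) for c, ok in validators.items()},
    }


customers = [
    {"id": 1, "email": "a@example.com", "age": 34},
    {"id": 2, "email": "", "age": -5},
    {"id": 2, "email": "c@example.com", "age": None},
]
report = quality_report(
    customers,
    required=["id", "email"],
    key="id",
    validators={"age": lambda a: 0 <= a <= 130, "email": lambda e: "@" in e},
)
print(report)
# {'rows': 3, 'missing': {'id': 0, 'email': 1}, 'duplicate_keys': 1, 'invalid': {'age': 1, 'email': 0}}
```

A pipeline can run such a report on every batch and refuse to publish data whose counts exceed agreed thresholds.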

Conclusion

Big data platforms are essential for organizations that want to harness the power of their data. By understanding the key components, technologies, and architectures of big data platforms, organizations can build solutions that meet their specific needs and achieve their business objectives.

As big data platforms continue to evolve, it’s important to stay abreast of the latest trends and technologies to keep your platform effective and competitive. Organizations that invest in the right platform, skills, and governance will be well positioned to succeed in a data-driven world.